Using OpenAI's Agents SDK with Gram-hosted MCP servers

The OpenAI Agents SDK is a lightweight framework from OpenAI for building agentic AI applications.

When combined with Gram-hosted MCP servers, the Agents SDK enables you to build sophisticated agents that can interact with your APIs, databases, and other services through natural language conversations with persistent context.

This guide shows you how to connect the OpenAI Agents SDK to a Gram-hosted MCP server using Taskmaster, a full-stack CRUD application for task and project management. Taskmaster includes a web UI for managing projects and tasks, a built-in HTTP API, OAuth 2.0 authentication, and a Neon PostgreSQL database for storing data. Try the demo app.

You’ll learn how to set up the connection, configure agents with MCP tools, and build conversational task management workflows.

Understanding OpenAI API options

OpenAI provides three main approaches for integrating with MCP servers:

- The Responses API: An API with a simple request-response pattern, ideal for basic tool calling and quick integrations.

- The Agents SDK (this guide): An advanced agent framework with sessions, handoffs, and persistent context, well suited to complex conversational workflows.

- ChatGPT Connectors: Connectors bring MCP tools directly into ChatGPT, so end users can use them through the ChatGPT web UI.

If you’re unsure which approach fits your needs, start with the Responses API guide for simpler implementations or try ChatGPT Connectors for a web UI solution.

Prerequisites

To follow this tutorial, you need:

- A Gram account

- A Taskmaster MCP server set up and configured

- An OpenAI API key

- A Python environment set up on your machine

Creating a Taskmaster MCP server

Before connecting the OpenAI Agents SDK to a Taskmaster MCP server, you first need to create one.

Follow the guide to creating a Taskmaster MCP server, which walks you through:

- Setting up a Gram project with the Taskmaster OpenAPI document

- Getting a Taskmaster API key from your instance

- Configuring environment variables

- Publishing your MCP server with the correct authentication headers

Once you have your Taskmaster MCP server configured, return here to connect it to the OpenAI Agents SDK.

Connecting Agents SDK to your Gram-hosted MCP server

The OpenAI Agents SDK supports MCP servers through the HostedMCPTool class. Here’s how to connect to your Gram-hosted MCP server:

Installation

First, install the required packages:

```bash
pip install openai-agents
```

Set your OpenAI API key and Taskmaster credentials:

```bash
export OPENAI_API_KEY=your-openai-api-key-here
export MCP_TASKMASTER_API_KEY=your-taskmaster-api-key
export GRAM_KEY=your-gram-api-key # Optional: only needed for private MCP servers
```

Code Examples

Throughout this guide, replace your-taskmaster-slug in the server URL with your own server's slug, and update the header names to match your server configuration from the guide to creating a Taskmaster MCP server.
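Every example below sends the same headers, so you may prefer to build them in one place. The following is a minimal sketch that assumes the environment variable names shown above (`MCP_TASKMASTER_API_KEY`, plus `GRAM_KEY` for private servers) and the `MCP-TASKMASTER-API-KEY` header name used in this guide. `build_taskmaster_headers` is a hypothetical helper, so rename the headers to match your server configuration.

```python
import os


def build_taskmaster_headers() -> dict[str, str]:
    """Build MCP request headers from environment variables (hypothetical helper).

    Assumes the header and variable names used in this guide; adjust them to
    match your own Gram server configuration.
    """
    taskmaster_key = os.getenv("MCP_TASKMASTER_API_KEY")
    if not taskmaster_key:
        raise ValueError("MCP_TASKMASTER_API_KEY is not set")

    headers = {"MCP-TASKMASTER-API-KEY": taskmaster_key}

    # GRAM_KEY is optional: only private Gram MCP servers need it.
    gram_key = os.getenv("GRAM_KEY")
    if gram_key:
        headers["Authorization"] = f"Bearer {gram_key}"

    return headers
```

You could then write `"headers": build_taskmaster_headers()` in the `tool_config` of the examples that follow. The helper fails early with a clear message when the key is missing, instead of sending an empty header to the server.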

Basic connection (public server)

Here’s a basic example using a public Gram MCP server:

```python
import os

from agents import Agent, Runner, HostedMCPTool

# Configure the Taskmaster MCP tool
taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY")
        },
        "require_approval": "never"
    }
)

# Create an agent with the MCP tool
agent = Agent(
    name="Task Manager",
    instructions="You help users manage their tasks and projects using Taskmaster. Be helpful and conversational.",
    tools=[taskmaster_tool]
)

# Run the agent
result = Runner.run_sync(agent, "What tasks do I have?")
print(result.final_output)
```

Authenticated connection

For private Gram MCP servers, include your Gram API key in an Authorization header. The exact headers you need depend on how you configured your server.

```python
import os

from agents import Agent, Runner, HostedMCPTool

# Load the Gram API key from the environment
GRAM_KEY = os.getenv("GRAM_KEY")
if not GRAM_KEY:
    raise ValueError("Missing GRAM_KEY environment variable")

# Configure the authenticated Taskmaster MCP tool
taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            # Gram authenticates private servers with your Gram API key
            "Authorization": f"Bearer {GRAM_KEY}",
            # If your server also expects the Taskmaster key as a header, add it:
            # "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY"),
        },
        "require_approval": "never"
    }
)

agent = Agent(
    name="Task Manager",
    instructions="You help users manage their tasks and projects using Taskmaster.",
    tools=[taskmaster_tool]
)

result = Runner.run_sync(agent, "Create a new task called 'Review OpenAI Agents SDK integration'")
print(result.final_output)
```

Understanding the configuration

Each parameter in the tool_config does the following:

- `type: "mcp"` specifies that this is an MCP tool.

- `server_label` is a unique identifier for your MCP server.

- `server_url` is the URL of your Gram-hosted MCP server.

- `headers` sets authentication headers (optional for public servers).

- `require_approval` controls whether tool calls need approval.
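If you create several agents, a small factory keeps these parameters consistent. The following sketch returns the plain dictionary you would pass as `HostedMCPTool(tool_config=...)`. The function name `taskmaster_tool_config` is hypothetical. The URL pattern follows this guide's `https://app.getgram.ai/mcp/<slug>` examples, and the optional `allowed_tools` key mirrors the tool filtering example later in this guide.

```python
from typing import Optional

GRAM_MCP_BASE_URL = "https://app.getgram.ai/mcp"


def taskmaster_tool_config(
    slug: str,
    headers: dict[str, str],
    require_approval: str = "never",
    allowed_tools: Optional[list[str]] = None,
) -> dict:
    """Return a tool_config dict for HostedMCPTool (hypothetical helper)."""
    config = {
        "type": "mcp",                         # marks this as an MCP tool
        "server_label": "taskmaster",          # unique label for this server
        "server_url": f"{GRAM_MCP_BASE_URL}/{slug}",
        "headers": headers,                    # authentication headers, if any
        "require_approval": require_approval,  # tool call approval behavior
    }
    # Only add allowed_tools when you want to restrict the exposed tools.
    if allowed_tools is not None:
        config["allowed_tools"] = list(allowed_tools)
    return config
```

For example: `HostedMCPTool(tool_config=taskmaster_tool_config("your-taskmaster-slug", headers))`. Because the function returns an ordinary dictionary, you can inspect or unit-test the configuration without contacting the server.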

Advanced agent features

The Agents SDK provides several advanced features that go beyond simple tool calling.

Persistent sessions

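To carry context across turns, pass a session to each run. The session stores the conversation history and adds it to the next run's input automatically, so the agent can refer back to earlier turns: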

```python
import os

from agents import Agent, Runner, HostedMCPTool, SQLiteSession

taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY")
        },
        "require_approval": "never"
    }
)

agent = Agent(
    name="Project Assistant",
    instructions="""You are a project management assistant. You help users:
- Create and organize tasks
- Track project progress
- Set priorities and deadlines
Remember context from previous interactions.""",
    tools=[taskmaster_tool]
)

# The session stores conversation history between runs
# (SQLiteSession keeps it in memory unless you pass a db_path)
session = SQLiteSession("website-redesign-demo")

# First interaction
result1 = Runner.run_sync(agent, "Create a project called 'Website Redesign'", session=session)
print("First:", result1.final_output)

# Second interaction: the session supplies the earlier turn, so "that project" resolves
result2 = Runner.run_sync(agent, "Add a task to that project: 'Design new homepage'", session=session)
print("Second:", result2.final_output)

# Third interaction: the agent still has the full conversation
result3 = Runner.run_sync(agent, "What tasks are in the Website Redesign project?", session=session)
print("Third:", result3.final_output)
```

Tool approval workflows
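An approval workflow comes down to a policy that maps a tool name to an approve or deny decision. That policy is plain Python you can write and test on its own. The following sketch uses a default-deny allowlist: only the listed tools run automatically, and anything else is rejected, including tools added to the server later. The function name `decide` is hypothetical, and the tool names are examples from this guide that depend on your server configuration.

```python
# Tools that may run without a human in the loop (example names).
AUTO_APPROVED_TOOLS = frozenset({
    "taskmaster_get_tasks",
    "taskmaster_get_projects",
    "taskmaster_create_task",
})


def decide(tool_name: str) -> dict:
    """Return an approval result shaped like {"approve": bool, "reason": str}.

    Default-deny: any tool that is not explicitly allowlisted is rejected.
    """
    if tool_name in AUTO_APPROVED_TOOLS:
        return {"approve": True}
    return {
        "approve": False,
        "reason": f"'{tool_name}' is not on the auto-approve list",
    }
```

To use it with the SDK, call it from your `on_approval_request` function, for example `return decide(request.data.name)`. With a default-deny policy, a forgotten tool fails closed instead of running unreviewed.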

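For production environments, you can require approval before tools run. With require_approval set to "always", the SDK calls your on_approval_request function when a tool call needs approval, and the function approves or rejects it: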

```python
import os

from agents import (
    Agent,
    HostedMCPTool,
    MCPToolApprovalFunctionResult,
    MCPToolApprovalRequest,
    Runner,
)

# Define which tools require approval (names depend on your server configuration)
SAFE_TOOLS = {"taskmaster_get_tasks", "taskmaster_get_projects"}
RESTRICTED_TOOLS = {"taskmaster_delete_task", "taskmaster_delete_project"}


def approve_tool_call(request: MCPToolApprovalRequest) -> MCPToolApprovalFunctionResult:
    """Decide whether a requested MCP tool call may run."""
    tool_name = request.data.name
    if tool_name in SAFE_TOOLS:
        return {"approve": True}
    elif tool_name in RESTRICTED_TOOLS:
        return {"approve": False, "reason": "Deletion operations require manual approval"}
    else:
        # Tools in neither set are approved by default
        return {"approve": True}


taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY")
        },
        "require_approval": "always"
    },
    on_approval_request=approve_tool_call
)

agent = Agent(
    name="Secure Task Manager",
    instructions="You help manage tasks with safety checks in place.",
    tools=[taskmaster_tool]
)

# If the agent tries a deletion tool, approve_tool_call rejects it
result = Runner.run_sync(agent, "Delete the task 'Design new homepage'")
print(result.final_output)
```

Error handling and retries
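Transient failures, such as a dropped connection or a rate limit, are often worth retrying before you show an error to the user. The following sketch is a generic retry wrapper with exponential backoff. It is not part of the Agents SDK: `run_with_retries` is a hypothetical helper, and which exception types count as transient is an assumption you should tune for your deployment.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def run_with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call fn(), retrying on retry_on exceptions with exponential backoff.

    The waits between attempts are base_delay, 2 * base_delay,
    4 * base_delay, and so on. Other exceptions propagate immediately.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # no attempts left: re-raise the last error
            time.sleep(base_delay * (2 ** attempt))
    raise RuntimeError("unreachable")
```

For example, `run_with_retries(lambda: Runner.run_sync(agent, "List my tasks"), retry_on=(openai.APIConnectionError, openai.RateLimitError))`. Keep in mind two things. The OpenAI client already retries some failed requests on its own. Retrying a whole run can also repeat tool calls that already succeeded, such as creating the same task twice.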

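When a run fails, the Agents SDK raises an exception that you can catch. You can also tell the agent in its instructions how to respond when a tool call fails: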

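```python
import os

from agents import Agent, AgentsException, HostedMCPTool, MaxTurnsExceeded, Runner

taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY")
        },
        "require_approval": "never"
    }
)

agent = Agent(
    name="Resilient Task Manager",
    instructions="""You help manage tasks. If a tool call fails:
1. Explain what went wrong
2. Suggest alternative approaches
3. Try again if appropriate""",
    tools=[taskmaster_tool]
)

try:
    result = Runner.run_sync(agent, "Create a task with invalid data")
    print(result.final_output)
except MaxTurnsExceeded:
    # The agent kept calling tools without reaching a final answer
    print("Agent error: the run exceeded its maximum number of turns")
except AgentsException as e:
    # Base class for errors raised by the Agents SDK
    print(f"Agent error: {e}")
except Exception as e:
    # Anything else, such as network or API errors from the OpenAI client
    print(f"Unexpected error: {e}")
```

Building conversational workflows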

The following complete example demonstrates building a conversational task management workflow:

```python
import os

from agents import Agent, Runner, HostedMCPTool, SQLiteSession


def create_task_manager_agent():
    """Create a task management agent with Taskmaster integration"""
    taskmaster_tool = HostedMCPTool(
        tool_config={
            "type": "mcp",
            "server_label": "taskmaster",
            "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
            "headers": {
                "MCP-TASKMASTER-API-KEY": os.getenv('MCP_TASKMASTER_API_KEY')
            },
            "require_approval": "never"
        }
    )

    return Agent(
        name="Taskmaster Pro",
        instructions="""You are Taskmaster Pro, an expert project management assistant.
Your capabilities:
- Create and manage projects and tasks
- Set priorities, deadlines, and track progress
- Provide project insights and recommendations
- Help with workflow optimization
Be conversational, helpful, and proactive in suggesting improvements.
Always confirm important actions before executing them.""",
        tools=[taskmaster_tool]
    )


def interactive_session():
    """Run an interactive task management session"""
    agent = create_task_manager_agent()
    # Keep the conversation history so the agent can follow up on earlier
    # turns, for example after asking you to confirm an action
    session = SQLiteSession("taskmaster-cli")
    print("🎯 Taskmaster Pro is ready! Type 'quit' to exit.\n")

    while True:
        user_input = input("You: ").strip()
        if user_input.lower() in ['quit', 'exit', 'bye']:
            print("Taskmaster Pro: Goodbye! Your tasks are in good hands. 👋")
            break
        if not user_input:
            continue
        try:
            result = Runner.run_sync(agent, user_input, session=session)
            print(f"Taskmaster Pro: {result.final_output}\n")
        except Exception as e:
            print(f"Taskmaster Pro: Sorry, I encountered an error: {e}\n")


if __name__ == "__main__":
    interactive_session()
```

Tool filtering and permissions

You can control which tools are available to your agent:

```python
import os

from agents import Agent, Runner, HostedMCPTool

# Create an MCP tool that exposes only the listed tools
taskmaster_tool = HostedMCPTool(
    tool_config={
        "type": "mcp",
        "server_label": "taskmaster",
        "server_url": "https://app.getgram.ai/mcp/your-taskmaster-slug",
        "headers": {
            "MCP-TASKMASTER-API-KEY": os.getenv("MCP_TASKMASTER_API_KEY")
        },
        "allowed_tools": [
            "taskmaster_get_tasks",
            "taskmaster_create_task",
            "taskmaster_update_task",
            "taskmaster_get_projects",
            # Deletion tools are left out, so the agent cannot delete anything
        ],
        "require_approval": "never"
    }
)

agent = Agent(
    name="No-Delete Task Manager",
    instructions="You can view, create, and update tasks, but you cannot delete anything.",
    tools=[taskmaster_tool]
)
```

For reference, Taskmaster MCP servers provide tools like taskmaster_get_tasks, taskmaster_create_task, taskmaster_delete_task, taskmaster_get_projects, and taskmaster_create_project. The exact tool names depend on your MCP server configuration.
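Rather than maintaining the allowlist by hand, you can derive it from the server's tool names by excluding anything destructive. The following sketch assumes that destructive tools contain "delete" in their names, as the Taskmaster tool names above do. `safe_tool_names` is a hypothetical helper. Check your server's actual tool list before relying on a naming rule like this.

```python
def safe_tool_names(
    all_tools: list[str], blocked_words: tuple[str, ...] = ("delete",)
) -> list[str]:
    """Return the tool names that contain none of the blocked words (case-insensitive)."""
    return [
        name
        for name in all_tools
        if not any(word in name.lower() for word in blocked_words)
    ]


# Tool names listed in this guide; yours depend on your server configuration.
TASKMASTER_TOOLS = [
    "taskmaster_get_tasks",
    "taskmaster_create_task",
    "taskmaster_delete_task",
    "taskmaster_get_projects",
    "taskmaster_create_project",
]

print(safe_tool_names(TASKMASTER_TOOLS))
# -> ['taskmaster_get_tasks', 'taskmaster_create_task', 'taskmaster_get_projects', 'taskmaster_create_project']
```

Pass the result as the `allowed_tools` value in your `tool_config`. A name-based rule is only as reliable as your server's naming, so an explicit list is the safer choice when the tool set is small.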

Testing your integration

Validating MCP server connectivity

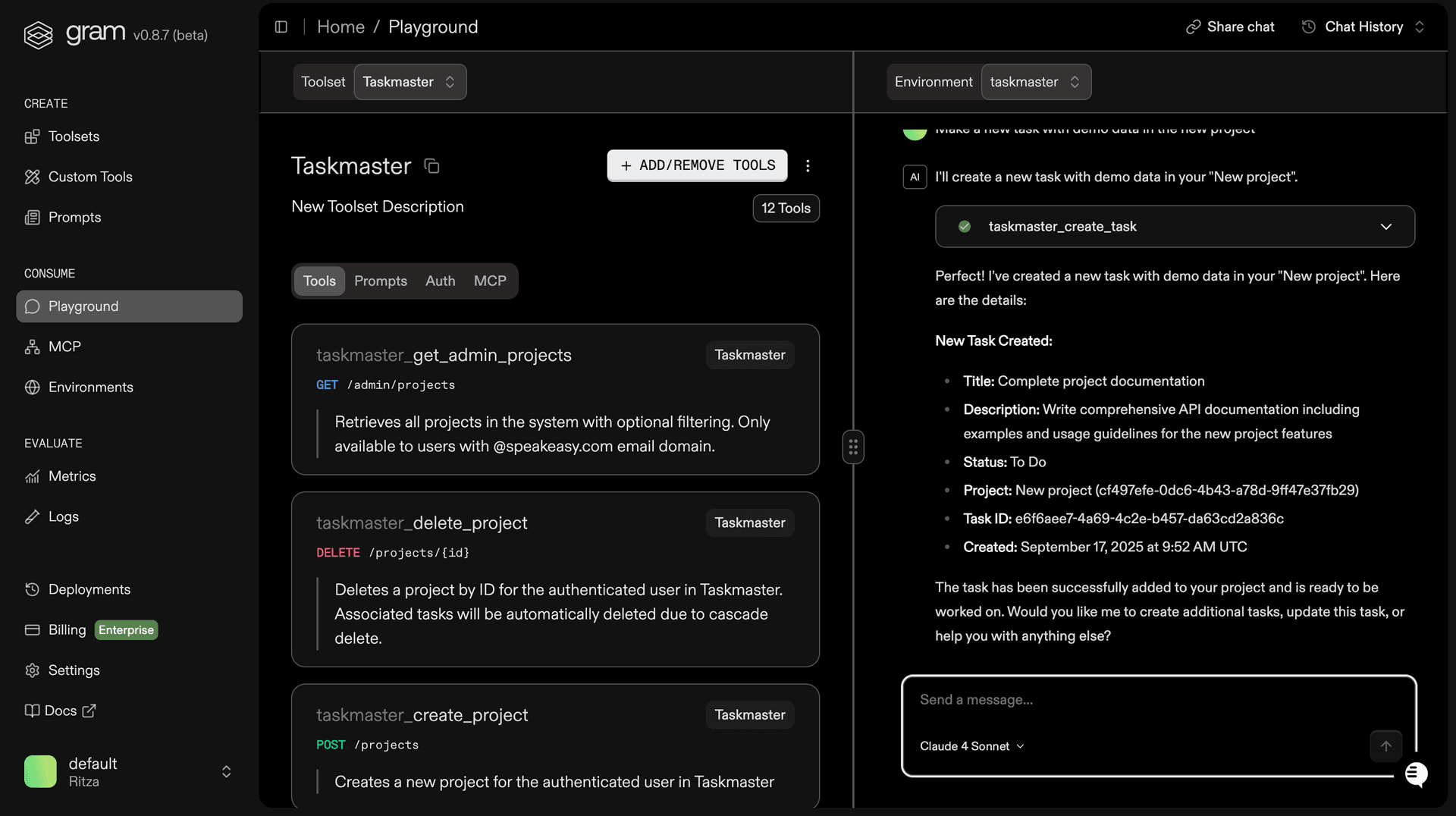

Before building complex workflows, test your Gram MCP server in the Gram Playground.

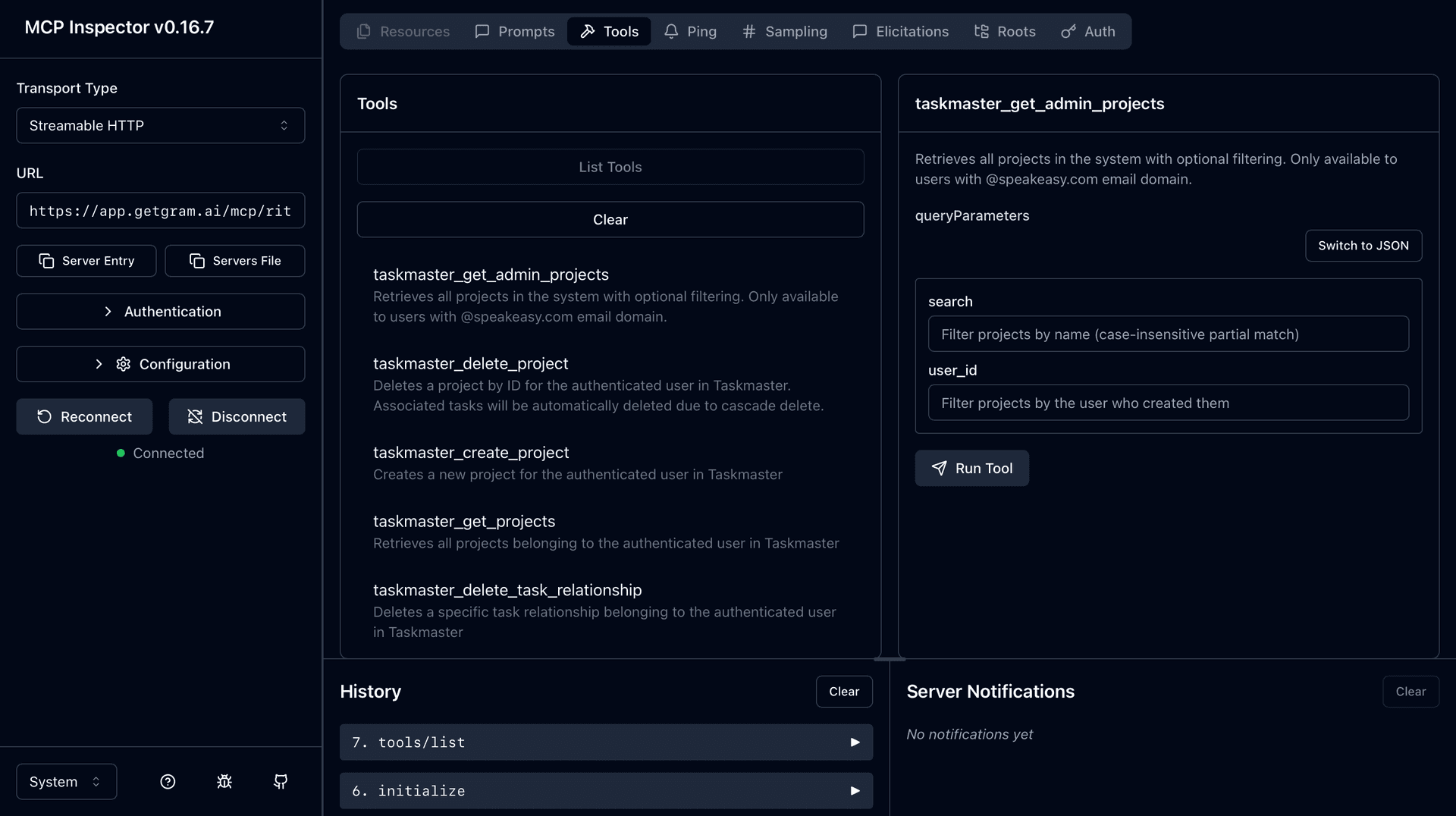

Using the MCP Inspector

You can also use the MCP Inspector to test your server:

```bash
npx -y @modelcontextprotocol/inspector
```

When the browser opens:

- In the Transport Type field, select Streamable HTTP (not the default stdio).

- Enter your server URL: https://app.getgram.ai/mcp/your-taskmaster-slug.

- For authentication, add API Token Authentication:

  - Header name: MCP-TASKMASTER-API-KEY

  - Bearer token: Your Taskmaster API key

- Click Connect to test the connection.

Note: Taskmaster servers use custom authentication headers that the standard MCP Inspector interface may not fully support. For more reliable testing, use the Gram Playground or the code examples in this guide.
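If the Inspector cannot send your server's custom header, you can send a raw MCP `initialize` request instead. The following sketch uses only the Python standard library. It follows the MCP Streamable HTTP transport, which expects a JSON-RPC 2.0 POST whose `Accept` header lists both `application/json` and `text/event-stream`. The protocol version string, the client name, and the function names are assumptions, so use values your server supports. The request only performs the initialization handshake and calls no tool. A success suggests the server is reachable and accepts your headers, but a wrong Taskmaster API key may only surface when a tool runs.

```python
import json
import urllib.request


def build_initialize_request(url: str, headers: dict[str, str]) -> urllib.request.Request:
    """Build (but do not send) an MCP initialize request for Streamable HTTP."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",  # assumption: use a version your server supports
            "capabilities": {},
            "clientInfo": {"name": "taskmaster-smoke-test", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with plain JSON or an SSE stream
            "Accept": "application/json, text/event-stream",
            **headers,
        },
        method="POST",
    )


def check_connection(url: str, headers: dict[str, str]) -> int:
    """Send the initialize request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, for example
    a 401 when the authentication headers are wrong.
    """
    request = build_initialize_request(url, headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

For example: `check_connection("https://app.getgram.ai/mcp/your-taskmaster-slug", {"MCP-TASKMASTER-API-KEY": os.environ["MCP_TASKMASTER_API_KEY"]})`. For a private server, add the `Authorization: Bearer <GRAM_KEY>` header as well.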

Debugging agent interactions
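The Agents SDK logs through Python's standard `logging` module under the `openai.agents` logger. Setting the root logger to DEBUG also turns on debug output from every other library. You can instead route only the SDK's logs to a file. The following is a minimal sketch that assumes writing a local `agents-debug.log` file is acceptable in your environment. `configure_agents_logging` is a hypothetical helper.

```python
import logging


def configure_agents_logging(path: str = "agents-debug.log") -> logging.Logger:
    """Send DEBUG output from the Agents SDK's logger to a file.

    Call this once at startup: each call attaches another file handler.
    """
    logger = logging.getLogger("openai.agents")
    logger.setLevel(logging.DEBUG)

    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger
```

Because the handler is attached to `openai.agents` rather than the root logger, HTTP client libraries stay at their default verbosity while the SDK's own messages are captured in full.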

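The Agents SDK traces each run by default, and you can inspect the traces in the OpenAI dashboard. For local debugging, you can also print the SDK's logs to the console: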

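```python
from agents import Agent, Runner, enable_verbose_stdout_logging

# Print the SDK's debug logs to stdout
enable_verbose_stdout_logging()

# Reuses the taskmaster_tool defined in the earlier examples
agent = Agent(
    name="Debug Agent",
    instructions="You help users manage their tasks and projects using Taskmaster.",
    tools=[taskmaster_tool]
)

# Detailed logs appear in the console, and the run's trace appears in the OpenAI dashboard
result = Runner.run_sync(agent, "List my tasks")
print(result.final_output)
```

What’s next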

You now have the OpenAI Agents SDK connected to your Gram-hosted MCP server with advanced task management capabilities.

The Agents SDK’s features, like persistent sessions, approval workflows, and built-in error handling, make it ideal for building production-ready conversational agents that can handle complex workflows.

Ready to build your own MCP server? Try Gram today and see how easy it is to turn any API into agent-ready tools that work with both OpenAI and Anthropic models.
